CNS 2015 in Prague: Notes

Right after ICML 2015 I got to visit the Computational Neuroscience (CNS) meeting in Prague. Impressed by the advances in deep learning and the AI field in general, I was eager to see how neuroscience (NS) could benefit. This post served as a notebook during the conference and is biased towards finding applications of DL in NS.

In general this meeting seems to be quite modeling-oriented. Compared to the Bernstein meeting I attended last year, CNS has less diversity in topics, which probably makes it good for people interested in their particular field but leaves less space for more general ideas. Here the “computational” part seems to be more of a tool and is rarely an aim in its own right.

Day 1

Learning and variability in birdsong by Adrienne Fairhall.

The computational model uses STDP in a reinforcement learning paradigm, implemented in a simple shallow neural net. To note: STDP at the core of a learning algorithm once again.

Day 2

Wilson-Cowan Equations for Neocortical Dynamics by Jack Cowan.

Quite interesting history lesson about McCulloch, Pitts, von Neumann, Shannon. Wilson-Cowan equation — a model for neuronal populations.
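To remind myself what the Wilson-Cowan model looks like, here is a minimal sketch of one excitatory and one inhibitory population, each relaxing towards a sigmoid of its net input. It leaves out the refractory terms of the full model, and every number in it (weights, gains, thresholds, time constants, external input) is an illustrative assumption of mine, not a value from the talk.

```python
import numpy as np


def sigmoid(x, gain, threshold):
    """Population response function: fraction of active cells for input x."""
    return 1.0 / (1.0 + np.exp(-gain * (x - threshold)))


def simulate_wilson_cowan(steps=5000, dt=0.01, ext_e=1.25, ext_i=0.0):
    """Euler integration of a simplified Wilson-Cowan pair (no refractory term).

    tau_e * dE/dt = -E + S_e(w_ee * E - w_ei * I + ext_e)
    tau_i * dI/dt = -I + S_i(w_ie * E - w_ii * I + ext_i)
    """
    # Illustrative parameters (assumptions, not taken from the talk).
    w_ee, w_ei, w_ie, w_ii = 16.0, 12.0, 15.0, 3.0
    tau_e, tau_i = 1.0, 1.0

    exc = np.zeros(steps)
    inh = np.zeros(steps)
    exc[0], inh[0] = 0.1, 0.05

    for t in range(steps - 1):
        drive_e = w_ee * exc[t] - w_ei * inh[t] + ext_e
        drive_i = w_ie * exc[t] - w_ii * inh[t] + ext_i
        exc[t + 1] = exc[t] + dt / tau_e * (-exc[t] + sigmoid(drive_e, 1.3, 4.0))
        inh[t + 1] = inh[t] + dt / tau_i * (-inh[t] + sigmoid(drive_i, 2.0, 3.7))
    return exc, inh


exc, inh = simulate_wilson_cowan()
print(f"final E = {exc[-1]:.3f}, final I = {inh[-1]:.3f}")
```

Both activities are fractions of active cells, so they stay between 0 and 1; what the pair does over time (fixed point or oscillation) depends on the chosen parameters.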

Limit of scalability of neural network models by Sacha van Albada.

Typical correlations in networks are 0.01-0.25. Looked at downscaling.

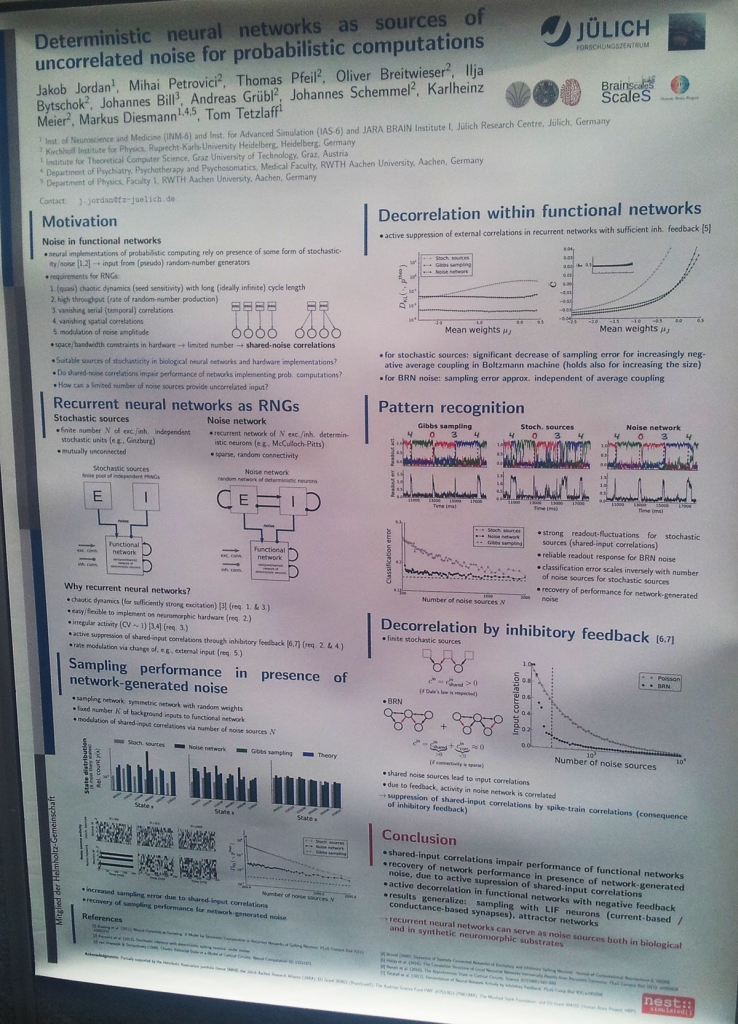

High-conductance state in prediction of LIF neurons and sampling by Mihai A. Petrovici.

LIF (Leaky Integrate-and-Fire)-based Boltzmann machines. 96.7% on MNIST (kNN gets 95%, a CNN 99.5%). Implemented on the “Spikey” neuromorphic chip.
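For reference, a plain LIF neuron is tiny to write down. The sketch below is only the textbook current-driven neuron, not the high-conductance-state model or the Boltzmann machine from the talk, and all parameter values (membrane time constant, thresholds, resistance, input current) are assumptions chosen for illustration.

```python
def simulate_lif(input_current, steps=1000, dt=0.1, tau_m=10.0,
                 v_rest=-65.0, v_thresh=-50.0, v_reset=-65.0, resistance=10.0):
    """Leaky integrate-and-fire neuron driven by a constant current.

    Integrates tau_m * dV/dt = -(V - v_rest) + resistance * input_current
    with Euler steps of size dt (ms). Returns the list of spike times (ms).
    """
    v = v_rest
    spike_times = []
    for step in range(steps):
        v += dt / tau_m * (-(v - v_rest) + resistance * input_current)
        if v >= v_thresh:
            spike_times.append(step * dt)  # record the spike ...
            v = v_reset                    # ... and reset the membrane
    return spike_times


strong = simulate_lif(input_current=2.0)  # steady state above threshold: fires
weak = simulate_lif(input_current=1.0)    # steady state below threshold: silent
print(len(strong), len(weak))
```

The neuron fires only when the steady-state potential `v_rest + resistance * input_current` lies above `v_thresh`; otherwise the leak wins and it stays silent.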

Quantifying the distance to criticality under subsampling by Jens Wilting, Viola Priesemann.

Subsampling just means that we are limited in how much we can measure. Criticality maximizes information processing in networks. Supercritical activity corresponds to epilepsy. The brain does not operate at the critical threshold, but in the subcritical region. They want to measure the distance from the operating mode of the brain to criticality and overcome the limits of subsampling the process. Distance measure: a branching network with branching factor \(\sigma\), where \(\sigma < 1\) is subcritical, \(\sigma = 1\) is critical and \(\sigma > 1\) is supercritical. A Multistep Linear Regression (MLR) estimator is applied to the sample instead of the conventional approach of extrapolating spike numbers from the subsample. MLR is robust to subsampling.
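To convince myself why several regression lags help, here is a toy version of the idea as I understood it. This is my own sketch under assumptions, not the authors' implementation: activity follows a driven branching process with Poisson offspring, each event is observed independently with a fixed probability, and the branching factor is read off from how the regression slope decays with the lag, using a straight-line fit to the log slopes. The drive `h`, the observed fraction and the number of lags are made-up values.

```python
import numpy as np


def simulate_branching(sigma, h, steps, rng):
    """Driven branching process: A[t+1] ~ Poisson(sigma * A[t] + h), sigma < 1."""
    activity = np.empty(steps, dtype=np.int64)
    activity[0] = rng.poisson(h / (1.0 - sigma))  # start near the stationary mean
    for t in range(steps - 1):
        activity[t + 1] = rng.poisson(sigma * activity[t] + h)
    return activity


def subsample(activity, fraction, rng):
    """Keep each event independently with probability `fraction`."""
    return rng.binomial(activity, fraction)


def regression_slopes(series, max_lag):
    """Slope r_k of the regression of series[t + k] on series[t], k = 1..max_lag."""
    lags = np.arange(1, max_lag + 1)
    slopes = np.array([np.polyfit(series[:-k], series[k:], 1)[0] for k in lags])
    return lags, slopes


def multistep_estimate(series, max_lag=10):
    """Fit r_k = b * sigma**k through log(r_k) and return sigma.

    Assumes all slopes are positive, which holds here for long recordings.
    """
    lags, slopes = regression_slopes(series, max_lag)
    log_sigma, _log_b = np.polyfit(lags, np.log(slopes), 1)
    return float(np.exp(log_sigma))


rng = np.random.default_rng(0)
full = simulate_branching(sigma=0.9, h=10.0, steps=100_000, rng=rng)
observed = subsample(full, fraction=0.1, rng=rng)

single_lag = regression_slopes(observed, 1)[1][0]  # conventional one-step slope
multi_lag = multistep_estimate(observed)
print(f"true sigma 0.9, single-lag {single_lag:.2f}, multistep {multi_lag:.2f}")
```

Under subsampling the slope at lag k behaves like \(b\,\sigma^k\) with \(b < 1\), so a single lag underestimates \(\sigma\), while the decay rate across lags still carries it.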

www.neuroelectro.org by .

Lots of ways to explore the collected data. Genetic microarray data coupled with electrophysiology data as an example.

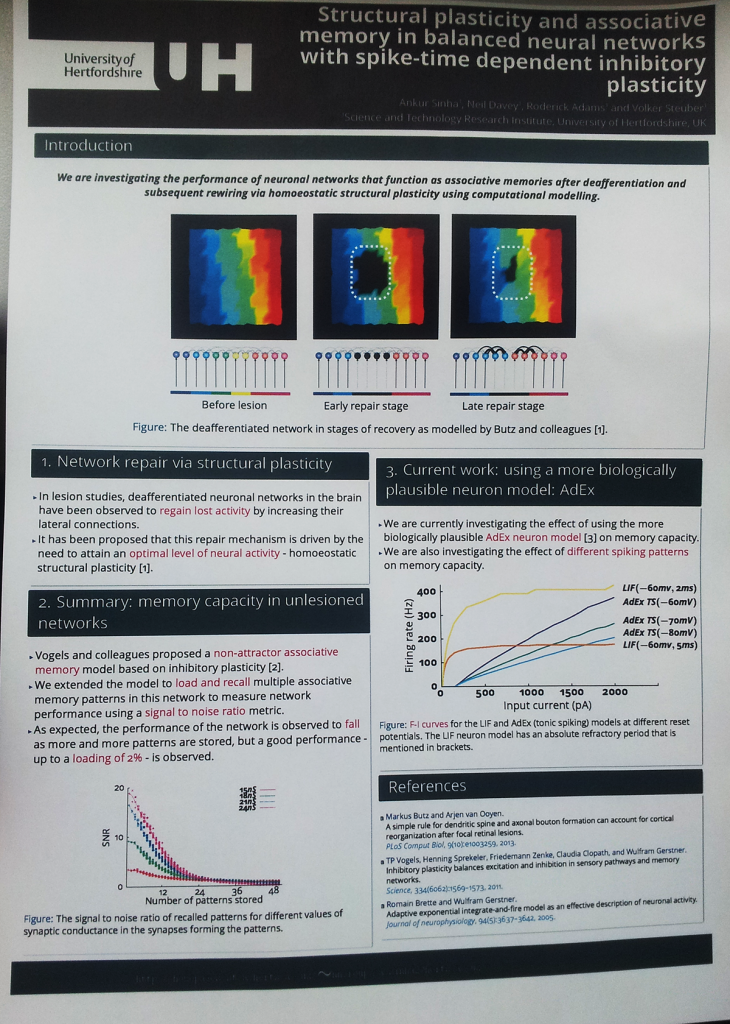

Complex synapses as efficient memory systems by Marcus K. Benna.

Why is a synapse not just a number? One important role of the complicated synaptic connection scheme is maximizing memory capacity. Memory is measured by SNR over time: the lower the SNR after time \(\Delta t\), the less memory the synapse has. Could this model serve as a replacement for an LSTM or GRU gate? Not sure what the constraint on the complexity of the model is: if my task is to maximize memory, why can’t I just take as complex a system as I want and be done with it? Biological constraints are probably the answer, but they were not explained.

Self-organization of computation by synaptic plasticity interactions by Sakyasingha Dasgupta.

STDP as a learning mechanism once again: \(W_{i,j} \sim F_i \times F_j\), where \(F_i\) are the presynaptic spikes and \(F_j\) the postsynaptic ones?
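Taken literally, the product rule above is a Hebbian outer-product update. A minimal sketch, assuming rate-coded activity and a made-up learning rate; it ignores spike timing entirely, so it is not STDP proper and not the model from the talk:

```python
import numpy as np


def hebbian_update(weights, pre_rates, post_rates, learning_rate=0.01):
    """Return weights + learning_rate * outer(pre, post).

    weights[i, j] connects presynaptic unit i to postsynaptic unit j,
    matching the W_{i,j} ~ F_i x F_j notation.
    """
    return weights + learning_rate * np.outer(pre_rates, post_rates)


pre = np.array([1.0, 0.0, 2.0])   # hypothetical presynaptic rates F_i
post = np.array([0.5, 1.0])       # hypothetical postsynaptic rates F_j
weights = hebbian_update(np.zeros((3, 2)), pre, post)
print(weights)
```

Connections from the silent presynaptic unit stay at zero, and the strongest growth is between the most active pair.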

A model for spatially periodic firing in hippocampus by .

Place cells. Grid cells. Head direction cells.

Day 3

The Dynamics of Resting Fluctuations in the Brain by Gustavo Deco.

There is resting-state activity in the brain, so we should not treat the black-box brain as a baseline but should try to account for the underlying processes.

Investigating the Effect of Electrical Brain Stimulation using a Connectome-based Brain Network Model by Tim Kunze.

1-2 mA stimulation with 2 electrodes. Experiments were run in The Virtual Brain framework. Different input levels provoke different dynamical states. Not sure what the conclusion is.

Closing the Loop: Optimal Stimulation of C. elegans Neuronal Network via Adaptive Control to Exhibit Full Body Movements by Julia Santos.

Created movement in an artificial worm by stimulating the right neurons. The resulting “worm” is able to crawl forward and backward.

Day 4

Self-Organization to sub-criticality by Viola Priesemann.

Try to drive a DNN to criticality and see whether performance gets better or the number of neurons needed gets smaller?

Day 5

Change Point Detection in Time-Series by Justin Dauwels.

Gaussian graphical models. Other methods for change point detection are slow (like \(O(n^3)\)); here \(O(n)\) and \(o(n)\) are proposed. Penalized maximum likelihood method. Variational Bayesian inference.
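To get a feeling for how a penalized likelihood criterion can run in \(O(n)\), here is a much simpler stand-in than the graphical-model method from the talk: a single shift in the mean of a 1-D signal, assuming Gaussian noise with constant variance. Cumulative sums give the squared error of every possible split in one pass, and a split is accepted only if it reduces the error by more than a penalty. The function name and the penalty value are my own choices.

```python
import numpy as np


def detect_mean_shift(x, penalty):
    """Find one change in the mean of x, or return None.

    For every split s (left segment x[:s], right segment x[s:]) the reduction
    in squared error versus a single mean is computed from cumulative sums,
    so the whole scan is O(n). The best split is returned only if its
    reduction exceeds `penalty`.
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    if n < 2:
        return None

    csum = np.cumsum(x)
    csum_sq = np.cumsum(x ** 2)
    splits = np.arange(1, n)

    # Sum of squared deviations from the segment mean: sum(x^2) - sum(x)^2 / len.
    left_sse = csum_sq[:-1] - csum[:-1] ** 2 / splits
    right_sum = csum[-1] - csum[:-1]
    right_sse = (csum_sq[-1] - csum_sq[:-1]) - right_sum ** 2 / (n - splits)
    total_sse = csum_sq[-1] - csum[-1] ** 2 / n

    gain = total_sse - (left_sse + right_sse)
    best = int(np.argmax(gain))
    return int(splits[best]) if gain[best] > penalty else None


signal = np.concatenate([np.zeros(50), np.full(50, 5.0)])
print(detect_mean_shift(signal, penalty=10.0))  # -> 50
```

Multiple change points or changes in a covariance structure need more machinery; this only shows where the linear cost comes from.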

Large-scale cortical network models with laminar structure: frequency-specific feedforward and feedback interactions by Jorge Mejias.

Large-scale model of macaque cortex with bioplausible constraints (shouldn’t all brain models be like that?). Explains how inputs (stimuli) affect gamma and a bunch of other things.

Slow Sleep Oscillations and Memory Consolidation by Maxim Bazhenov.

Sleep consolidates memories of facts and events. Slow wave sleep: declarative memory; REM: non-declarative. Wake: sensors -> thalamus -> cortex (short-term) -> hippocampus. Sleep: thalamus <-> cortex (reinforcement) <-> hippocampus.

Reconstruction and Simulation of Neocortical Microcircuitry by Eilif Muller.

Blue Brain Project. Overview of modelled structures. 86% excitatory, 14% inhibitory. They do massive simulations over morphologies and over different neuron types. Synaptic anatomy. Looks like the most reasonable view on the modeling approach I’ve seen so far.

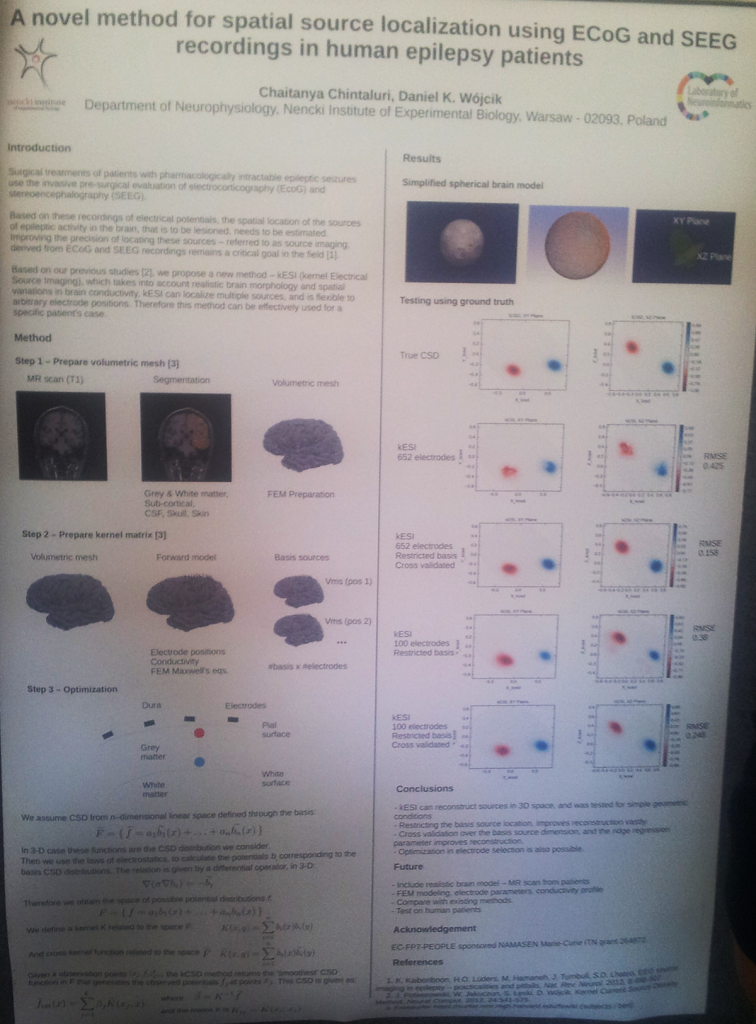

Spatial properties of evolving seizures: what we can learn from human microelectrode recordings by Cathy Schevon.

Seizure localization from intracranial recordings. Utah array + ECoG grid.

Day 6

…

Posters

There was a poster about Tempotron, a perceptron with time. Should look into it, or is this once again a reformulation of known things in a new framework?

Poster by Michael: we can ask him about it once we want to understand the IT measure he is developing.
