Bernstein Conference 2014: Impressions

Here I am in Göttingen, looking at all kinds of neuroscientists and their research. In this post I will note down ideas and events while they are fresh in my memory. Note that I will mention only the things that made an impression. I will skip the ones which I did not understand or which were subjectively boring.

Day 1

Today we have workshops, which are the same old lectures, but given by very diverse speakers: from master's students up to senior researchers. There are 12 parallel workshops, so one has to choose wisely which to attend. This format is a good way to get familiar with different branches of computational neuroscience.

Adaptive multi-electrode monitoring of cortical movement plans for neuroprosthetic control by Shubodeep Chakrabarti.

When you implant electrodes for intracortical (and other intra*) brain recordings, the tip of the electrode has to sit as close as possible to the neuron (or even to a specific part of the neuron). Once you have placed it correctly you are happy. The problem is that micromovements will, after some time, shift the electrode relative to the neuron, and the signal will change (or even disappear). To deal with that, experimentalists can manually adjust the depth of individual electrodes. That is an OK approach if you have up to 10 electrodes, but imagine handling several Utah arrays (96 or 120 electrodes each) in the same manner once they become adjustable: it is not feasible.

This presentation was about a system and an algorithm that adjust the position of an electrode dynamically to maintain the best signal. It transforms the signal into PCA space, clusters it, and then by trial and error finds the position that maximizes both the distance between cluster centers and their distance from the noise cluster.
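
To make the trial-and-error step concrete for myself, here is a minimal sketch of how such a search could look. It is not the speaker's algorithm: I assume PCA and clustering have already happened upstream, I combine the two distances by simply adding them, and `toy_recording` is a made-up stand-in for a real recording at a given depth.

```python
import math

def separation_score(unit_centers, noise_center):
    """Score a position from cluster centers in PCA space.

    Adds the smallest distance between any two unit clusters to the
    smallest distance from a unit cluster to the noise cluster.
    (Adding the two terms is an assumption of this sketch.)
    """
    between = min(
        (math.dist(a, b)
         for i, a in enumerate(unit_centers)
         for b in unit_centers[i + 1:]),
        default=0.0,
    )
    from_noise = min(math.dist(c, noise_center) for c in unit_centers)
    return between + from_noise

def adjust_depth(record_clusters, depth, step=5.0, max_moves=50):
    """Greedy trial and error: try one step up and one down, keep the better spot."""
    best = separation_score(*record_clusters(depth))
    for _ in range(max_moves):
        candidates = [depth - step, depth + step]
        scores = [separation_score(*record_clusters(d)) for d in candidates]
        top = max(scores)
        if top <= best:
            break  # neither neighbour improves the signal, so stay here
        best = top
        depth = candidates[scores.index(top)]
    return depth, best

def toy_recording(depth):
    """Hypothetical recording: clusters spread apart as depth nears 100 (arbitrary units)."""
    amplitude = math.exp(-((depth - 100.0) / 40.0) ** 2)
    unit_centers = [(3.0 * amplitude, 0.0), (0.0, 2.0 * amplitude)]
    noise_center = (0.0, 0.0)
    return unit_centers, noise_center

final_depth, final_score = adjust_depth(toy_recording, 60.0)
print(final_depth, round(final_score, 3))
```

A real system would have to re-record and re-cluster after every move, so each "trial" is expensive; that is presumably why a cheap greedy search like this one is attractive.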

Public PhD Student Event

The evening was dedicated to Giulio Tononi and IIT (integrated information theory). First we had a tutorial on IIT, where Giulio Tononi tried to explain the theory. To my taste the tutorial was not successful. I think the problem is that he began with small examples that do not reflect the big idea of IIT, so it seemed to draw big conclusions from naive assumptions. Lots of questions were asked, few answers received. The discussion continued with a public lecture given again by Tononi, who explained IIT once more in even more general terms, and by Joseph Levine, who expressed his views on the search for consciousness and commented a bit on IIT. His talk was a good example of what philosophy of science is about.

Later that day we ended up in a bar with Tononi, and he had the chance to spend more time dodging our attacks. I must say that after those three hours I now understand why IIT is interesting. It is a theory that is beautiful from within its bubble, where it can produce some interesting predictions. But as soon as you try to apply it to something real, it stops making sense: it becomes too counterintuitive and there is no way to confirm or falsify the claims it makes. So it is a beautiful but useless construction, not usable in a scientific sense. However, it has value as an unusual way of thinking: I would say that IIT is a philosophical theory, not a scientific one.

Day 2

Workshops continue until lunch. This time I attended:

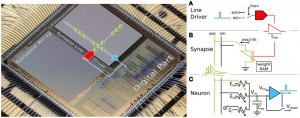

A Neuromorphic Network Built as a Hardware Chip by Thomas Pfeil.

So yes, people are doing that: there are chips built as analog circuits to emulate neuronal behavior. The neurons excite each other by sending currents, and the firing threshold is set programmatically beforehand. Being an analog system, it can give different outputs for the same input: for example, if the temperature of the device has changed, the conductance of the elements is affected and the signal now propagates somewhat differently. Error correction must be an important issue there. Using a modeling language like PyNN you can describe the model and then upload it to the hardware. The advantages of this approach are that it is massively parallel, well suited for modeling because it does directly what we want it to do, and faster and more power efficient than simulating the same network on an ordinary computer. Such a device is Turing-complete, because you can simulate logic gates using neurons and then build a universal machine out of those gates. Of course it is a crazy idea to do so because it would require an enormous network, but for some applications (in addition to the initial purpose of neural modeling) it might make sense. Spikey (that is what this particular chip is called) is one of many neuromorphic creations.
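
The logic gate argument is easy to check on paper. Below is a minimal sketch using idealized threshold units (McCulloch-Pitts style), which is my simplification and not how the analog spiking neurons on the chip actually behave. The weights and thresholds are my own choice for illustration.

```python
def neuron(inputs, weights, threshold):
    """Fire (1) if the weighted input sum reaches the threshold, else stay silent (0)."""
    return int(sum(w * x for w, x in zip(weights, inputs)) >= threshold)

def nand(a, b):
    # Two inhibitory inputs: the unit is silenced only when both inputs fire.
    return neuron((a, b), (-1, -1), -1)

def xor(a, b):
    # Classic construction of XOR from four NAND gates.
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "NAND:", nand(a, b), "XOR:", xor(a, b))
```

Since NAND alone is enough to build any Boolean circuit, one neuron type with inhibitory inputs already suffices in principle. The four neurons needed for a single XOR also hint at why the resulting network gets enormous.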

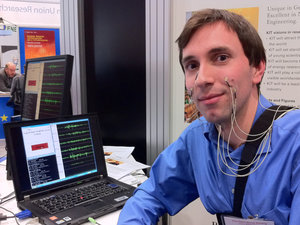

Decoding Silent Speech using High-density Myoelectric Signals by Michael Wand.

And another application of machine learning to a physiological signal. This time EMG electrodes are placed on the face of a test subject. The subject can talk without producing any sound (while still moving all the muscles) and the system transforms this activity into text in real time. Current performance on a 2000-word dictionary is an error rate of 13% (ordinary speech recognition has error rates around 5%).

Neuromorphic engineering: from brains to silicon implementations by Giacomo Indiveri.

What is the next step after the neuromorphic circuits being developed now? Once the technology is ready (reliable, scalable, etc.), how should we move forward? The idea here is to make artificial behaving systems that are good at the same set of tasks we humans are good at (as opposed to being good at what computers do well). Let me call the systems that aim at what computers do well computation oriented and the ones that aim at what humans do well behavior oriented.

There are already commercial systems among computation oriented neuromorphic chips: IBM TrueNorth is a 1 cm² chip designed for solving practical problems where a neuromorphic architecture makes sense.

The system presented here has quite a different goal: it tries to achieve decent performance in real time under the restrictions our brain has:

- time is not accelerated

- noise is important

- fault tolerance (up to 30% of nodes can die off and system still works)

- energy and heat efficiency

Limiting an artificial system in the same way we humans are limited gives natural boundaries within which we can try to simulate the brain: the restrictions hint at which way to go and forbid us from developing neural codes and neural mechanics that cannot be physically supported by our brain’s machinery.

The natural question to ask here is “Okay, how well does it work?”

- Learned to recognize a fixed pattern in an image. That implies that you can do machine learning, because what the network actually does is project your input into a high-dimensional space. The noise now plays the role that stochasticity plays in bagging and random forests.

- Attractor networks

- Winner-Take-All networks

- Moving objects are shown on a screen and the network performs complex decision making. Finite state machine.

And remember: all of these work at the same speed as our brain.

Possible application to BCI — intelligent non-heating electrodes.

And after that the conference itself began. It started with the usual words of welcome from various important people and so on. The opening lecture was interesting and inspiring, but as you can see I have no notes on it.

Day 3

Sensory Coding/Decoding

Self-organized learning and inference explain key properties of neural variability by Christoph Hartmann

Trial-to-trial variability is a problem. We know that, so we average. How much of it can one explain assuming there is no noise at all? The idea is to predict spikes from the pre-stimulus image. What looks like noise can then be seen as something with underlying structure. Noise is still there, but not all of the variability is noise. Hard to compare to real-world data.

Optimal Compensation for Neuron Death by Christian Machens

Neural systems are robust against damage. We know that. But why are they? The idea is to compensate not by changing weights, but by changing the firing rates of the neurons that are still alive. Linear combinations are redundant, so in most cases you can get the same values. Define the loss function for restoring behavior as error plus a penalty on firing rate. By solving it you get a vector of firing rate updates that minimizes the loss. You can remove a bunch of unknowns (the firing rates of the neurons that died) and still find a solution that gives the same representation.

- Subtract own readout from the input. Predictive coding network.

- See spikes as changes in the readout error space; the purpose of a spike is then to make the distance between the network’s representation and the actual one smaller (decrease the error).

- Trial-to-trial variability would then be explained by the redundancy: you can represent the same curve with lots of different spiking sequences.

Add the constraint that firing rates must be positive. Then the system is solved as a quadratic programming problem. Again we have lots of solutions (but remember that we have ignored the cost of firing). Piecewise linear shape. Adding costs helps you choose which solution makes sense (because the cost of firing is given by the rules of the physical world).

Quite a beautiful way to represent the properties of a network that is able to reorganize. And there are examples of similar behavior in experimental work.
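
Here is a small sketch of the loss as I understood it: squared readout error plus a quadratic cost on firing rates, with rates not allowed to go negative. The scalar signal, the decoder weights, the cost value and the solver are all my assumptions; I use projected gradient descent as a simple stand-in for a proper quadratic programming solver.

```python
def readout(decoder, rates):
    """Linear readout: weighted sum of firing rates."""
    return sum(d * r for d, r in zip(decoder, rates))

def compensate(x, decoder, alive, cost=0.01, lr=0.05, steps=2000):
    """Minimize (x - readout)**2 + cost * sum(r_i**2) subject to r_i >= 0.

    Dead neurons (alive[i] is False) are pinned to a rate of zero.
    Projected gradient descent: take a gradient step, then clip at zero.
    """
    rates = [0.0] * len(decoder)
    for _ in range(steps):
        error = x - readout(decoder, rates)  # computed once per sweep
        for i, d in enumerate(decoder):
            if not alive[i]:
                continue
            grad = -2.0 * d * error + 2.0 * cost * rates[i]
            rates[i] = max(0.0, rates[i] - lr * grad)
    return rates

# Hypothetical toy network: two neurons push the readout up, two push it down.
decoder = [1.0, 1.0, -1.0, -1.0]
signal = 2.0

healthy = compensate(signal, decoder, alive=[True, True, True, True])
damaged = compensate(signal, decoder, alive=[False, True, True, True])

print("healthy rates:", [round(r, 3) for r in healthy])
print("damaged rates:", [round(r, 3) for r in damaged])
print("readouts:", round(readout(decoder, healthy), 3),
      round(readout(decoder, damaged), 3))
```

In this toy case the surviving positive neuron roughly doubles its rate after its partner dies, and the readout stays close to the signal. No weights were changed, only firing rates, which is the point of the talk as I understood it.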

Neuroprosthetics

Machine listening and computational auditory neuroscience models – a relation with mutual benefits by Bernd Meyer

A filter bank of Gabor features is used in audio signal processing. Is it applicable to our gamma projects?

Restoration of Sight with Photovoltaic Subretinal Prosthesis by Daniel Palanker

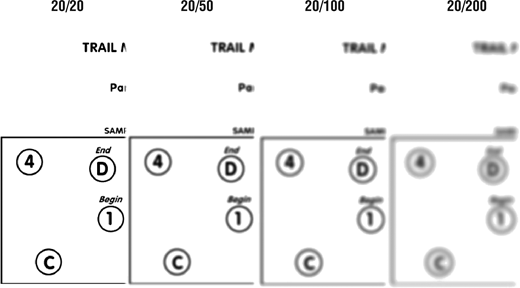

Normal human acuity is denoted as 20/20. The first number is the distance (in feet) from which a person can distinguish contours that are 1.75 mm apart. The second number is the distance from which a person with normal vision would see as badly as the impaired person does from the first distance. For example, an acuity of 20/40 means that what you can distinguish only from 20 feet, a person with normal vision can distinguish from 40 feet. In the picture you can see what different acuities could look like. You are still allowed to drive a car with up to 20/50. The research presented in this talk is about dealing with impairments caused by retinal damage (if the problem is there, the damaged part can be replaced with the electrode array shown in the video).

Acuity in monkeys was measured using a moving grating: the width of the stripes is decreased until neurons stop reacting to the movement of the grating (which means the subject no longer distinguishes the stripes). As you remember, normal acuity is 20/20. The naive solution that exists out there can give you something like 20/1200 (not very usable). The best result before their system was 20/550. With their system one can achieve an acuity of 20/256, and with training raise it to 20/200.
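
To get a feeling for these numbers I find it helpful to convert them into the smallest gap one can resolve. The sketch below uses the standard convention that 20/20 corresponds to resolving one arcminute, which at 20 feet (6.096 m) works out to roughly the 1.75 mm quoted above. The function names are mine.

```python
import math

TWENTY_FEET_M = 6.096  # 20 feet in metres

def snellen_to_mar(test_distance, reference_distance):
    """Minimum angle of resolution in arcminutes (20/20 gives 1 arcminute)."""
    return reference_distance / test_distance

def smallest_gap_mm(test_distance, reference_distance, viewing_distance_m=TWENTY_FEET_M):
    """Smallest resolvable gap in millimetres at the given viewing distance."""
    arcminutes = snellen_to_mar(test_distance, reference_distance)
    angle_rad = math.radians(arcminutes / 60.0)
    return 1000.0 * viewing_distance_m * math.tan(angle_rad)

# The acuities mentioned in the talk, all viewed from 20 feet.
for denominator in (20, 50, 200, 256, 550, 1200):
    gap = smallest_gap_mm(20, denominator)
    print(f"20/{denominator}: about {gap:.1f} mm")
```

So going from 20/550 to 20/200 means the smallest visible detail at 20 feet shrinks by a factor of 2.75.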

They are going commercial soon.

Adaptive feedback control of pathological oscillations in spiking neural networks by Ioannis Vlachos

Currently the symptoms of Parkinson’s disease can be “cured” by stimulating certain areas with a pulse of fixed (hardcoded) frequency. This talk proposed a closed-loop scheme, which can be more efficient because it monitors the neuronal activity and dynamically adjusts the rate of stimulation. The talk demonstrated the idea by suppressing undesired activity in an arbitrary spiking neural network (SNN).
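
The difference between fixed and closed-loop stimulation can be illustrated with a toy model. This is only my own cartoon, not the controller from the talk: the "network" is a single number (oscillation power) that relaxes toward a pathological level and is pushed down by stimulation, and the controller is a plain integral feedback rule. All constants are made up.

```python
def simulate(controller, steps=300, pathological=1.0, recovery=0.2, effect=0.1):
    """Toy plant: oscillation power drifts toward the pathological level
    and is reduced in proportion to the stimulation rate."""
    power, rate = pathological, 0.0
    for _ in range(steps):
        rate = controller(power, rate)
        power = max(0.0, (1.0 - recovery) * power
                    + recovery * pathological - effect * rate)
    return power, rate

def fixed_controller(fixed_rate):
    """Open loop: always stimulate at the same hardcoded rate."""
    return lambda power, rate: fixed_rate

def integral_controller(target=0.2, gain=0.1):
    """Closed loop: raise the rate while power is above target, lower it below."""
    def update(power, rate):
        return max(0.0, rate + gain * (power - target))
    return update

open_power, open_rate = simulate(fixed_controller(3.0))
closed_power, closed_rate = simulate(integral_controller())

print("fixed rate:  power", round(open_power, 3), "rate", round(open_rate, 3))
print("closed loop: power", round(closed_power, 3), "rate", round(closed_rate, 3))
```

In this cartoon the closed loop settles at the lowest rate that holds the power at the target, while the fixed rate has to be chosen in advance and ends up stimulating more than necessary.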

The Extraction of Neural Information from the Surface EMG for the Control of Upper-Limb Prostheses: Emerging Avenues and Challenges by Dario Farina, Sebastian Amsüss

The goal is to control a robotic arm using EMG. Muscles serve as an amplifier of the neural signal, so we can try to estimate the intentions of a patient (with a missing upper limb) from muscle activity. Motor task estimation is done using machine learning. The concept itself is old (around 40 years) and works well even with simple algorithms. Nowadays robotic arms are very good in terms of precision and the number of actions they can perform. The problem is in controlling all those available degrees of freedom. It is possible to achieve high accuracy rates (95%+) for 8 or even 10 degrees of freedom. The problem is that switching between them is not an intuitive operation, and it slows down the speed at which the prosthesis can be operated. People with a healthy hand do not think about using degrees of freedom, they just combine them naturally. This natural way of controlling more than one degree of freedom simultaneously is the problem researchers are trying to solve. The solution uses two machine learning models: one decides which action (out of 8 or 10) the patient wants to activate, and the second decides in which degree of freedom the arm should operate at this particular moment. This solution works and makes it possible for the patient to switch between degrees of freedom without explicitly thinking about it.

One step forward is to extract motor neuron activity from muscle activity and use that information in machine learning models.

There were two poster sessions, and there is no way to describe them briefly. Some posters were about almost pure machine learning and information theory, and there were even a couple about brain-computer interfaces.

During a satellite public lecture a humanoid kitchen robot was demonstrated.

Day 4

Neural Control of Action Selection

Neural mechanisms for making decisions in a dynamic world by Paul Cisek.

The environment always changes. You can see the decision making process as generating some distribution over how desirable each of the possible actions is and acting upon the most desirable one. What is the neural mechanism behind this process?

- Attention goes from visual areas to cortex, and different areas in the sensorimotor system start a rivalry for controlling subcortical structures.

- The situation updates continuously

How does the brain compute the biases in favor or against actions in a changing environment?

- The classical model of integrating inputs and thresholding is too slow (it has to return to the midpoint and only then adapt)

- What if we integrate only new information?

- And also let’s restrict it with a notion of reward.

Urgency gating model says:

- Actions go into the sensorimotor system and compete there

- Urgency signal comes from basal ganglia

- Experimental design to predict the (actual) probability at which the monkey is ready to make a decision. It confirms that on easy tasks the monkey is ready to commit with a lower level of confidence.

- With longer time the evidence (inside the monkey’s brain) drops. The descent begins in the 1 to 1.5 s time window. UGM predicts the shape of the curve of evidence level over time.

- Commitment occurs at a critical contrast between PMD cells.

- Stimulation close to the moment of commitment disrupts it and pushes its time forward.

- Larger reward leads to more risky (earlier) decision commitment.
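
As far as I understood, the core of the urgency gating model (UGM) is that momentary evidence is multiplied by an urgency signal that grows with time, and commitment happens when the product crosses a threshold. The sketch below is a simplified version under my own assumptions: urgency grows linearly, evidence is given as a list of samples, and all constants are made up.

```python
def commit_time(evidence, urgency_slope=1.0, threshold=1.0, dt=0.01):
    """Return (time, evidence) at which |evidence| * urgency first reaches threshold.

    evidence: momentary evidence values, one sample every dt seconds.
    Urgency is urgency_slope * elapsed time (linear growth is an assumption).
    Returns None if the threshold is never reached.
    """
    for step, e in enumerate(evidence, start=1):
        t = step * dt
        if abs(e) * urgency_slope * t >= threshold:
            return t, e
    return None

# Two hypothetical trials, two seconds each, with constant evidence.
strong = commit_time([2.0] * 200)
weak = commit_time([0.8] * 200)
print("strong evidence:", strong)
print("weak evidence:", weak)
```

With weak evidence the commitment comes later and at a lower evidence level, because by then urgency has grown enough to make up the difference. That is the qualitative drop in evidence over time from the list above. A larger `urgency_slope` makes all commitments earlier, which is one way to read the reward effect.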

You can look at the decision in PCA space, see how objects are oriented there, and draw interesting parallels between principal components and concepts related to decision making.

That’s it, now it is time to go and walk in the city.
